During a recent project with Azure Service Fabric, I encountered errors after provisioning which prevented proper SF operation, and ultimately led to an interesting discovery.

Service Fabric is Really IaaS

Azure Service Fabric is essentially just an intelligently managed IaaS environment. Many parts of an IaaS environment are inherently static, that is, once a particular performance level is provisioned, it remains at that perf level.

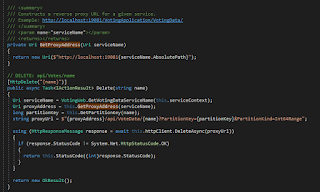

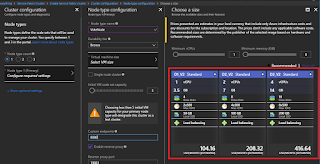

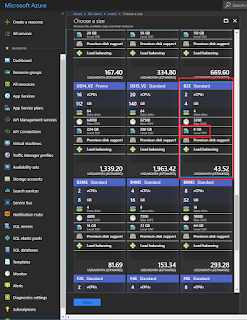

Service Fabric provisioning offers a choice of VM size, and it's very important to get it right: size it for anticipated peak load. Too big and you'll waste money; too small and the environment won't operate properly, or will perform poorly. Changing VM size later can cause service interruptions and is generally not recommended. The VM size applies to all nodes (VMs) of the scale set (or cluster) that SF provisioning creates. The scale set starts at a fixed size that can be changed manually, and can later be configured for automatic scaling.

Being Frugal

In my personal Azure test environment, I typically provision lower-end VM offerings to save money on small, short projects. This works fine for small apps with no load beyond my own experimentation. I do my project, then tear down the environment, or if it's something I intend to keep, I switch it to a free tier.


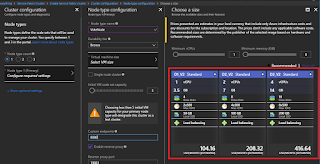

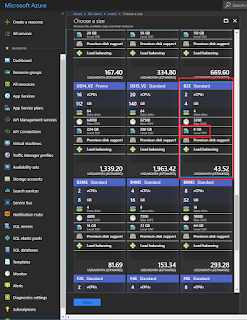

| VM Sizes Offered During Service Fabric Provisioning |

When it came time to build a Service Fabric environment, I used the same economical approach. Rather than choose the suggested VM at a cost of $104/VM/month, I chose a smaller one at $44/VM/month. The portal's UI allowed this choice, and during the validation phase it passed successfully. But all was not well, as I was to see.
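To put the temptation in numbers, here is a minimal sketch of the monthly arithmetic. The two per-VM prices come from the portal figures above; the node count of 5 is purely an assumption for illustration, so substitute your own cluster size.

```python
# Per-VM monthly prices quoted above (USD).
SUGGESTED_PRICE = 104
SMALLER_PRICE = 44

# Assumption for illustration only: a 5-node cluster.
NODE_COUNT = 5


def monthly_cost(price_per_vm: int, node_count: int) -> int:
    """Total monthly VM cost for a cluster where every node uses the same size."""
    return price_per_vm * node_count


suggested_total = monthly_cost(SUGGESTED_PRICE, NODE_COUNT)
smaller_total = monthly_cost(SMALLER_PRICE, NODE_COUNT)
savings = suggested_total - smaller_total

print(f"Suggested size: ${suggested_total}/month")
print(f"Smaller size:   ${smaller_total}/month")
print(f"Savings:        ${savings}/month")
```

Because the VM size applies to every node in the scale set, the per-VM difference is multiplied by the node count, which is what makes the cheaper size look so attractive.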

If You Build It, They Will [not] Come

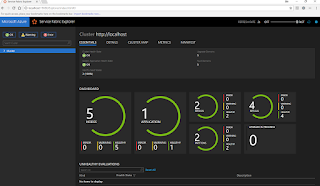

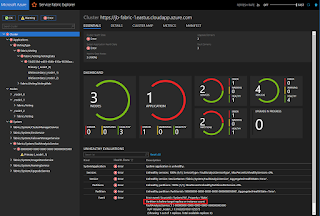

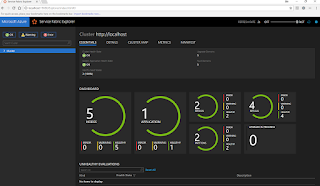

After waiting about 30 minutes for the new SF environment to be created and brought online, I checked the results via the SF monitoring web page. This page is created automatically when an SF environment is created, and by default is available at http://<your URL>:19080/Explorer/index.html#/. Here's what that looks like with a local 5-node setup.


| Service Fabric Monitor - Normal |
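The same port that serves the monitoring page also exposes the Service Fabric REST API, so the health state can be read without a browser. The following is a rough sketch, not something from my original setup: it assumes an unsecured cluster reachable over plain HTTP (a secured cluster would need HTTPS and a client certificate), and it assumes API version 6.0 is accepted by your runtime. The helper names are my own.

```python
import json
from urllib.request import urlopen


def cluster_health_url(host: str, port: int = 19080, api_version: str = "6.0") -> str:
    """Build the URL for the cluster health query on the HTTP gateway port."""
    return f"http://{host}:{port}/$/GetClusterHealth?api-version={api_version}"


def aggregated_health_state(host: str, timeout: float = 10.0):
    """Return the cluster's aggregated health state, e.g. "Ok", "Warning" or "Error".

    Assumes an unsecured endpoint; returns None if the field is missing.
    """
    with urlopen(cluster_health_url(host), timeout=timeout) as response:
        return json.load(response).get("AggregatedHealthState")


# Example usage against a local development cluster (requires the cluster to be running):
#     print(aggregated_health_state("localhost"))
```

A check like this is handy right after provisioning, since a passing portal validation says nothing about the health state the cluster actually reports.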

But, for some reason, my brand-new Azure-based Service Fabric booted with warnings, and when I ran my SF app, the environment quickly went into failure. I wasn't too pleased - isn't Azure technology supposed to be better than that, and self-heal in this case?

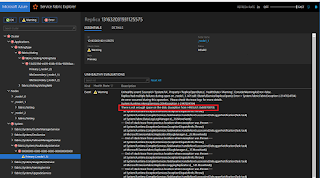

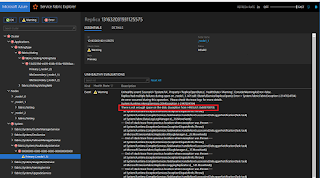

Digging down in the SF Monitor, I noticed a single status line that gave insight into the failure: a VM disk was too full, despite having just been provisioned. As a side note, Service Fabric VM disks are now configured as Managed Disks, an improvement over legacy disks that required traditional monitoring and oversight; Microsoft takes care of that now.


| Disk Full |

A disk-full issue is normally fairly easy to resolve, but the bigger question is how a brand-new SF installation could yield such a condition, especially with Microsoft-managed disks. My app hadn't even started yet. Logging in to one of the VMs showed 100 GB of free space on the C: system drive, and several GB free on the temporary D: drive. The disks weren't full.

In short order, the disk-full warning caused a node failure, which escalated into a partial cluster outage and lots of red on the screen. The error message "Partition is below target replica or instance count" is a verbose way of saying that a node is down, which implies that the node failed due to its environment or startup code.


| Node failure Caused by Full Disk |

Oh Yah, RTFM

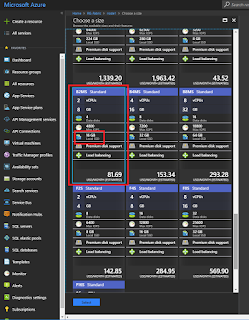

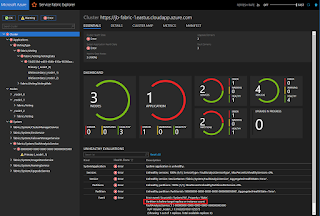

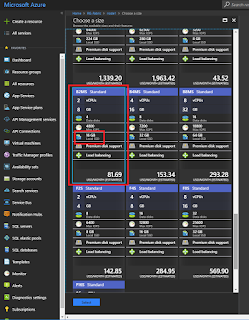

After some non-trivial research I discovered a clue. Microsoft's Service Fabric capacity planning recommendations state a requirement of a 14GB "local" disk. The word "local" isn't well defined; my little VM had a 128GB "local" system disk, and an 8GB "local" temp disk. Nonetheless, I made the inferential jump that my 8GB disk, despite having several GB of available space, was too small.


| Too-Small Local Disk in Selected VM SKU |

Even though my application wasn't going to touch the D: temp drive, I rightly guessed that Service Fabric had intentions for its use, and thus the implied requirement that the size be larger. I wish they'd just stated that explicitly.
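Since the portal's validation didn't catch this, a small pre-flight check of my own would have. Here's a minimal sketch under stated assumptions: the 14GB threshold is the figure from the capacity planning guidance, interpreted (as I inferred above) as applying to the temp disk; the `VmSku` type and the SKU names are hypothetical, and the 16GB value is an illustrative number rather than the size of any particular Azure SKU.

```python
from dataclasses import dataclass

# Minimum "local" disk size from the capacity planning guidance,
# interpreted here as the size of the temporary (D:) disk.
REQUIRED_LOCAL_DISK_GB = 14


@dataclass
class VmSku:
    """Hypothetical description of a VM size; not an Azure SDK type."""
    name: str
    temp_disk_gb: int


def local_disk_ok(sku: VmSku, required_gb: int = REQUIRED_LOCAL_DISK_GB) -> bool:
    """True if the SKU's temp disk meets the minimum local disk size."""
    return sku.temp_disk_gb >= required_gb


# Placeholder SKU names; the 8GB figure matches the small VM I picked.
candidates = [
    VmSku("hypothetical-small", temp_disk_gb=8),
    VmSku("hypothetical-larger", temp_disk_gb=16),
]

for sku in candidates:
    verdict = "ok" if local_disk_ok(sku) else "temp disk too small"
    print(f"{sku.name}: {sku.temp_disk_gb}GB temp disk, {verdict}")
```

The check compares total temp disk size, not free space, which is exactly the distinction that tripped me up: several GB free on an 8GB disk still fails.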

Fixing It

To check my hunch, I up-sized the VM SKU, as seen below. I didn't care about a service interruption, but since this change takes down the cluster, it explains the critical guidance to pick the right (peak-load) VM SKU during provisioning:


| Just-Right Local Disk of New VM SKU |

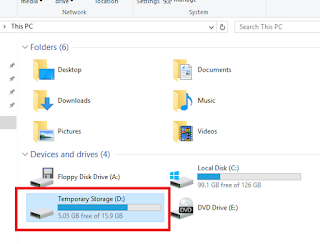

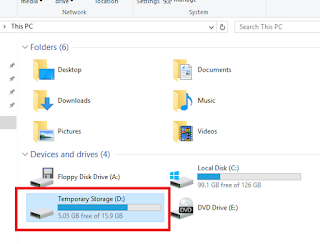

Several minutes after making the change, I logged into one of my SF cluster's VMs and noted the disk space consumed on the D: temp drive. Notice in the image below that Service Fabric has used about 10GB, even before my app started running. So there's the 14GB justification - and you can guess what happens if D: is only 8GB.


| VM Local Storage Free Space |
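The same measurement can be taken from a script instead of a screenshot. This is a small sketch using Python's standard library; it assumes Python is available on the node, and the `"D:\\"` path in the usage comment is the temp drive on my Windows VMs. The `fits_on_disk` helper is just my own illustration of the arithmetic.

```python
import shutil

BYTES_PER_GB = 1024 ** 3


def disk_report_gb(path: str) -> dict:
    """Return total, used and free space in GB for the disk containing `path`."""
    usage = shutil.disk_usage(path)
    return {
        "total": usage.total / BYTES_PER_GB,
        "used": usage.used / BYTES_PER_GB,
        "free": usage.free / BYTES_PER_GB,
    }


def fits_on_disk(used_gb: float, disk_size_gb: float) -> bool:
    """True if the observed usage would fit on a disk of the given size."""
    return used_gb <= disk_size_gb


# Example usage on a cluster VM:
#     print(disk_report_gb("D:\\"))

# Roughly 10GB of Service Fabric data cannot fit on an 8GB temp disk,
# but fits within the 14GB minimum from the guidance.
print(fits_on_disk(10, 8))
print(fits_on_disk(10, 14))
```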

At last, my Service Fabric cluster was fully operational and without error!

Lessons Learned

- Even if you know how to do something, double-check the documentation for tiny details that could derail your project.

- Even if Azure "validates" your configuration, it could still be invalid and non-functional.

- In certain parts of the Azure ecosystem, changing a resource configuration can take the environment down for a period. Not all Azure components - even those in pseudo fault-tolerant scenarios - continue operating during a reconfiguration.