
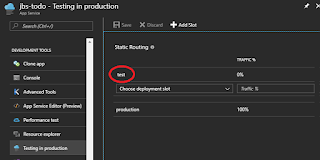

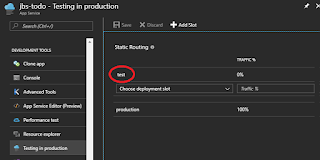

| Adding a new slot for TiP testing |

TiP (Testing in Production) is an Azure feature allowing live, in-production testing of web apps. TiP routes a configurable portion of normal inbound production traffic to an app slot containing a new version of an app. You watch the app's performance and logs to see if all is well. I suppose this could be called Gamma testing, since it occurs after Beta testing but before taking a full production load. This approach to semi-production testing works well so long as data schemas are compatible between the two versions.

Does TiP work? Well, yes: it provides a useful and simple way to check a new software release prior to going fully live with it. That's business value. But there's a hitch at present that messes up your app's configuration, causing 404s whose rectification requires (for now, anyway) deleting and redeploying the app and enduring a service outage. Oops.


| Slot configuration after creation |

TiP is available to any app that can have slots - Standard or Premium level App Service, etc. Upon setup, TiP will create a new slot and install static traffic routing, using weighted-mode traffic distribution. You decide how much traffic goes to the test slot, from 0 to 100%. The traffic manager is not directly visible via the portal, but the slot appears as a new Web App per usual, and is visible.

Once TiP warms up, a percentage of clients will get a cookie that, when submitted to Azure on a subsequent request, will direct their traffic to the test slot. That's a nice approach, until it isn't.

Cookie: x-ms-routing-name=your-test-slot-name

Failure Mode 1 - TiP Cookie Persistence gives 404s


| HTTP 404 with x-ms-routing-name cookie |

If you've completed TiP testing and decide to tear down the test environment - a perfectly normal thing to do in a cloud deployment - you're left with an issue. Clients with the slot cookie will continue to present it, but the traffic routing won't know about the slot anymore. An HTTP 404 Not Found error is the result. See the Postman screenshot.
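To make the failure easier to picture, here is a minimal toy model of the behavior described above. It is only a sketch: the real routing layer isn't visible, so the `route` function, the `PRODUCTION` label, and the idea that an unknown cookie value leads straight to a 404 are assumptions drawn from the observed symptoms, not Azure's actual implementation.

```python
import random
from typing import Dict, Optional, Tuple

PRODUCTION = "production"  # hypothetical label for the primary slot


def route(
    cookie_slot: Optional[str],
    weights: Dict[str, int],
    rng: random.Random,
) -> Tuple[int, Optional[str]]:
    """Return (http_status, slot) for one request in this toy model.

    weights maps a test slot name to its percentage of new traffic;
    whatever is left over goes to production. cookie_slot is the value
    of the client's x-ms-routing-name cookie, or None if it sent none.
    """
    known_slots = set(weights) | {PRODUCTION}
    if cookie_slot is not None:
        if cookie_slot in known_slots:
            # Sticky routing: the cookie pins the client to its slot.
            return 200, cookie_slot
        # Stale cookie naming a slot that no longer exists.
        return 404, None

    # No cookie yet: pick a slot by weight.
    roll = rng.random() * 100  # in [0, 100)
    cumulative = 0
    for slot, percent in weights.items():
        cumulative += percent
        if roll < cumulative:
            return 200, slot
    return 200, PRODUCTION
```

In this model, a client that still carries `test` in its cookie gets a 200 while `weights` contains `test`, and a 404 as soon as the slot is removed from `weights`, even though a cookie-less client would be served by production without trouble.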

The solution is for the client to drop the x-ms-routing-name cookie. That's no problem if you're testing locally, with full control of clients and cookies, but what about the real production clients, i.e. your customers? Ergo, Oops.
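For a test client you control, dropping the cookie can be as simple as filtering it out of the `Cookie` header before the request is sent. The helper below is a sketch of that idea; `strip_routing_cookie` is a made-up name, and it assumes the header is a plain `name=value; name=value` string.

```python
ROUTING_COOKIE = "x-ms-routing-name"


def strip_routing_cookie(cookie_header: str) -> str:
    """Return the Cookie header value with the TiP routing cookie removed."""
    kept = []
    for part in cookie_header.split(";"):
        part = part.strip()
        if not part:
            continue
        # Cookie names are compared case-insensitively here to be lenient.
        name = part.split("=", 1)[0].strip()
        if name.lower() != ROUTING_COOKIE:
            kept.append(part)
    return "; ".join(kept)
```

This only helps with clients whose code you can change. It does nothing for browsers already holding the cookie, which is exactly the production problem.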

After removing the cookie, here's the new result in Postman - HTTP 200 OK. Much better.


| HTTP 200 OK after removing cookie |

Failure Mode 2 - The Story of a Mucked-up Web App

There's no clear way to comprehensively remove TiP artifacts via the Azure portal, so when I completed testing, I just deleted the slot and the slot's Web App manually. That stopped those billing charges. As mentioned before, the implied static traffic routing didn't leave a visible artifact (such as a router, load balancer, etc.), so I couldn't take any action on it.


| Failure creating new slot |

A bit later, I decided to do another TiP session, but could no longer make new TiP slots using the original Web App as configuration source (this is an option during slot creation - see image above). Attempting to do so yielded this error message.

Figuring that there was possibly some "dirty" JSON configuration somewhere, I searched all the config files I could find, but couldn't locate "test" - the slot's name - in any of them.

Looking for a work-around, and based on knowing a wee bit about how software works, I tried creating another slot using a different name ("anyone" - see image) and for Configuration Source, I chose "Don't clone configuration from an existing web app". This worked, or so I thought. The slot got created and I could see its resources in the portal, just as before.


| New slot configuration |

I configured the new slot for traffic, fixed my Postman session as explained above, and fired off a new POST. Got a 404; what?! I confirmed that the slot was indeed up by using the direct URL to it; only the request to the primary URL (and through static traffic routing) got the 404. This didn't make any sense - all web apps were up and running, and the cookie was set properly.
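The check described above, direct slot URL versus primary URL with the routing cookie, can be scripted. The following is a rough sketch, not what I ran in Postman: it uses GET rather than POST, the function names are made up, and any URLs passed in are placeholders for your own app and slot.

```python
import urllib.error
import urllib.request
from typing import Callable, Optional

ROUTING_COOKIE = "x-ms-routing-name"


def fetch_status(url: str, slot_cookie: Optional[str] = None) -> int:
    """GET the URL, optionally sending the routing cookie; return the status code."""
    request = urllib.request.Request(url)
    if slot_cookie is not None:
        request.add_header("Cookie", f"{ROUTING_COOKIE}={slot_cookie}")
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # urllib raises on 4xx/5xx; the code is what we want


def diagnose(
    primary_url: str,
    slot_url: str,
    slot_name: str,
    fetch: Callable[..., int] = fetch_status,
) -> str:
    """Compare the slot's direct URL with the primary URL routed via cookie."""
    direct = fetch(slot_url)
    routed = fetch(primary_url, slot_name)
    if direct == 200 and routed == 404:
        return "slot is up, but routing through the primary URL fails"
    if direct != 200:
        return "slot itself is not responding normally"
    return "routing looks healthy"
```

Passing `fetch` as a parameter keeps the comparison logic testable without touching the network. In my case the result would have been the first message: the slot answered directly, and the routed request came back 404.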

Keep in mind that this broken configuration, and the 404, were in live production.

At this point, TiP testing was hosed for the Web App, so I reset test traffic to 100% for the original Web App, and 0% for the broken slot. Then I removed the slot's resources as before. This restored normal production operation, except for the cookie issue mentioned above, which likely means that static traffic routing is still trying to function.
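I made the traffic reset in the portal, but the same change can probably be scripted. The sketch below builds command lines for the Azure CLI's `az webapp traffic-routing` command group (`set` and `clear`). The helper names and the resource group and app names are placeholders, and I haven't verified that the CLI route avoids the problems described here.

```python
import subprocess
from typing import Dict, List


def traffic_routing_set(
    resource_group: str, app_name: str, distribution: Dict[str, int]
) -> List[str]:
    """Build an `az webapp traffic-routing set` command for the given slot percentages."""
    if not distribution:
        raise ValueError("at least one slot=percentage pair is required")
    if any(not 0 <= pct <= 100 for pct in distribution.values()):
        raise ValueError("each percentage must be between 0 and 100")
    if sum(distribution.values()) > 100:
        raise ValueError("slot percentages must not exceed 100 in total")
    pairs = [f"{slot}={pct}" for slot, pct in distribution.items()]
    return [
        "az", "webapp", "traffic-routing", "set",
        "--resource-group", resource_group,
        "--name", app_name,
        "--distribution", *pairs,
    ]


def traffic_routing_clear(resource_group: str, app_name: str) -> List[str]:
    """Build an `az webapp traffic-routing clear` command (all traffic to production)."""
    return [
        "az", "webapp", "traffic-routing", "clear",
        "--resource-group", resource_group,
        "--name", app_name,
    ]


def run(command: List[str]) -> None:
    """Execute a built command; requires the Azure CLI and a logged-in session."""
    subprocess.run(command, check=True)
```

For example, `run(traffic_routing_set("my-rg", "my-app", {"anyone": 0}))` would correspond to the 100%/0% split above, with `my-rg` and `my-app` standing in for real names.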

As a side note, the 404s seem to come from IIS, based on header information I saw, even if the app uses Kestrel. TiP apparently puts some infrastructure between the client and the web app, and that's likely what generates the cookies too. It's not possible to fully discern this magic invisible infrastructure, but enough symptoms show through to make a good guess.

How to fully fix the Web App

Desiring to restore the Web App completely, and rid the configuration of any bad settings, I found no other solution than to delete the app (but leave the App Service Plan). Then I recreated it, reconnected it to source control, and triggered a redeploy. This got everything back in a few minutes for this very small test. Having source-control based deployment is really nice for this case, and it nearly automated the restore. Nonetheless, this approach did cause a brief service outage - in production.

I'm hoping that this entire behavior is a bug that'll one day be fixed, but in the meantime you might just want to consider testing the good old-fashioned way - not in production.

References