Over the past couple of days, I performed a "lab" experiment using Azure Site Recovery (ASR). This is the feature that, amongst other things, lets you replicate on-premise Hyper-V virtual machines to Azure.

ASR can be one component of a disaster recovery / business continuity plan: it lets Hyper-V workloads run in Azure after a brief fail-over period. Once ASR is set up, one click "fails over" the network to a newly provisioned Azure virtual machine, which continues operations. Data from the on-premise VM disks is replicated to an Azure storage account frequently, minimizing data loss.

Recovery Point Objective (RPO) and Recovery Time Objective (RTO) targets can be met for modest requirements, though perhaps not for transactional workloads.

You can also use this technique to migrate on-premise VMs to an Azure IaaS setup, though it's a funky approach and I'm not sure I'd really recommend it.

My home office computing environment is flexible and provides a platform for modeling and experimenting with a Windows Server 2012 R2 domain, Hyper-V, networks, databases, and so on. I created an expendable test VM for the experiment, one I could throw away once it was done. I figured I'd spend a day setting it up, playing with it, and then tearing it all back down.

That was the plan, anyway. But it didn't work out that way.

Sure, I watched the videos and read the documentation. I checked the prerequisites. And the Azure management portal is generally pretty good at guiding administrators through the steps to set up almost any resource. With these three sources, plus my solid general knowledge of the technology and architecture involved, I figured it wouldn't be too hard.

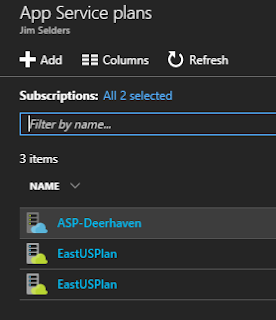

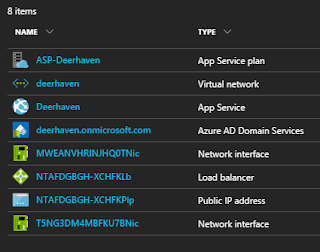

I started in the Azure portal, setting up resources using the Resource Group approach (i.e., not Classic). Setup steps include creating a backup vault, a storage account, and a virtual network. The storage account holds the replicated VM's disks, and the virtual network is where the Azure VM will connect after a fail-over. But you don't create a VM, since you'd pay quite a bit for that resource even if it's never used. Instead, Azure automatically provisions a VM upon fail-over, selecting a best match for the "hardware" configuration of your Hyper-V VM (this adds a few minutes to RTO in exchange for greatly reduced costs).
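To make "best match" a little more concrete, here is a minimal Python sketch of one plausible rule: pick the smallest size whose cores and memory both meet or exceed the source VM's. This is my own illustration, not how ASR actually chooses; the candidate list and the `best_match` function are hypothetical, and real Azure sizes and prices vary by region and change over time.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VmSize:
    name: str
    cores: int
    memory_gb: float


# Hypothetical, illustrative candidate list; not an authoritative Azure catalog.
CANDIDATES = [
    VmSize("Standard_A1", 1, 1.75),
    VmSize("Standard_A2", 2, 3.5),
    VmSize("Standard_A3", 4, 7.0),
    VmSize("Standard_A4", 8, 14.0),
]


def best_match(cores, memory_gb, candidates=CANDIDATES):
    """Return the smallest size meeting or exceeding the source VM's cores and memory, or None."""
    fits = [s for s in candidates if s.cores >= cores and s.memory_gb >= memory_gb]
    if not fits:
        return None
    # Smallest by cores first, then memory, as a stand-in for "cheapest adequate fit".
    return min(fits, key=lambda s: (s.cores, s.memory_gb))


print(best_match(2, 4.0).name)  # Standard_A3 (A2 has enough cores but too little memory)
```

A real selector would presumably also weigh disk count, network adapters, and price; this only shows the "meet or exceed, then take the smallest" idea.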

Hurdle 1 - Not on a DC

The first step in preparing the on-premise machines is to install the ASR agent, downloaded from the Azure portal, on the Hyper-V host. This is the computer that actually runs the VMs you'd like to protect.

This is when issue #1 appeared. It isn't documented at present, but you cannot run the ASR agent on a domain controller. Yep, in my small office, I have a DC doing overtime by running several roles that normally wouldn't be combined that way in a commercial environment. In my low-load environment, though, it works fine. This was where my VMs were running, but thanks to this restriction, I was unable to proceed. Dead end on that computer.

[Screenshot: ASR error message]

Fortunately, I have a new laptop that functions as a backup DC and Hyper-V host, and it's even more powerful than my older desktop/server machine, so I was able to demote it and install the agent there. Annoying, but not a deal-breaker. It just meant running without a backup domain controller for a day - a minor risk I was willing to take to appease somebody in Redmond.

Hurdle 2 - Not during the summer

Once the agent was installed on a Hyper-V host, the next step was to download a configuration file from the Azure portal and supply it to the ASR setup utility. Trivial, right?

No.

It turns out that ASR setup checks the time-of-day accuracy of the host before proceeding. I could speculate about why, but that doesn't matter. With the host computer's timezone set to "(UTC-05:00) Eastern Time (US & Canada)" and Daylight Saving Time in effect (which gives an actual offset of UTC-04:00), ASR insisted that my time was wrong and refused to proceed. I keep my computers (and clocks, etc.) within 1 second of the correct time, so I knew this was a bogus "error".
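For the curious, here's a minimal sketch of how a clock check could go wrong this way. It assumes, hypothetically (I don't know what ASR actually does), that the check derives UTC from the local clock using the zone's standard offset rather than the DST-adjusted one. A perfectly correct summer wall clock then looks exactly one hour off. The date and the `skew_seconds` helper are made up for illustration.

```python
from datetime import datetime, timedelta, timezone

# Made-up moment: a July afternoon on a host whose wall clock is correct.
WALL_CLOCK = datetime(2016, 7, 15, 14, 0, 0)                     # local time on the host (naive)
TRUE_UTC = datetime(2016, 7, 15, 18, 0, 0, tzinfo=timezone.utc)  # reference time from a time server

STANDARD_OFFSET = timedelta(hours=-5)  # "(UTC-05:00) Eastern Time" base offset
DST_OFFSET = timedelta(hours=-4)       # actual offset while Daylight Saving Time is in effect


def skew_seconds(local, offset, reference_utc):
    """Seconds between the UTC time implied by (local, offset) and a reference UTC time."""
    implied_utc = local.replace(tzinfo=timezone(offset)).astimezone(timezone.utc)
    return (implied_utc - reference_utc).total_seconds()


print(skew_seconds(WALL_CLOCK, DST_OFFSET, TRUE_UTC))       # 0.0 (correct offset: no skew)
print(skew_seconds(WALL_CLOCK, STANDARD_OFFSET, TRUE_UTC))  # 3600.0 (standard offset: one hour "off")
```

Any sensible tolerance, whether seconds or minutes, would flag that 3600-second gap, which matches the symptom I saw.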

I've never seen such an error from any other software. The interweb is full of problem reports about this issue, and even a couple of years after those complaints, it still isn't fixed. Maybe they only test in Redmond during the winter, when actual time offsets match the configured timezone?

Anyway, the workaround is easy enough: change the host's timezone to "(UTC-04:00) Atlantic Time (Canada)", then set the clock to the current local time where the host sits. Problem resolved, and I could continue with configuration. I also had to remember to reset the computer's timezone later.

Hurdle 3 - Not in a paired region

After getting the ASR Agent installed, configured, and running OK on the on-prem Hyper-V host, I continued setup within the Azure portal. In short order I successfully completed that, and clicked that wonderful button labeled "Enable replication".

Success! Or so I thought.

That's when the next hurdle in sequence appeared. If you imagine that I might be getting tired of being blocked by setup problems, you'd be right.

The problem was the Azure region I had selected for the Azure VM replica. I normally place Azure assets in East US, so I chose West US for the replicated VM and disks because of region pairing: West US is the paired region for East US. Well, an error appeared in the portal indicating that West US wasn't allowed (along with certain other regions).

Of course, I'd already configured a virtual network and storage account in the disallowed region, and now I had to tear them down and rebuild them in an acceptable region (moving those resources to another region wasn't an option in the portal). I chose West US 2, rebuilt the assets, and retried the replication.

Finally, replication started working.

At this point I thought it'd just take some time, and the replicated disks and VM would be ready for fail-over testing in Azure. A nice accomplishment, though with much time wasted bumping into problems that were either undocumented or bugs. Actual experimenting like this - a proof of concept - is where you discover the difference between the lovely videos and marketing material and how something really works.

Epilogue

Lest you think I was successful with ASR after all the above, there was one last issue that broke the whole deal.

When replication of my on-premise disks reached 98%, it stopped with an error in the Azure portal UI. The message was too general to be actionable: something about a failure accessing Azure storage, with advice to check the Hyper-V host's event logs for details. I did that, and found no information.

I also searched for a way to restart replication, as the error message further suggested, but alas could never find one in the portal.

So after many hours, configuration changes, and trials, I decided to terminate the experiment. I reset the host computer's timezone, removed the ASR software, and re-promoted it to a DC. Chalk it up to learning, and move on.

After resetting the host computer, I discovered that the replication restart I'd searched for was in the host's Hyper-V management tool, via right-clicking the appropriate VM. In other words, VM replication uses the mechanism built into Windows Server 2012 R2 Hyper-V, not something in the Azure agent. It would've been nice if the error message in the Azure portal had mentioned that; since most of the other replication setup was in the portal, one naturally assumed that's where the restart function lived.
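If you'd rather script that restart than hunt for the right-click menu, the Hyper-V PowerShell module includes a `Resume-VMReplication` cmdlet with a `-Resynchronize` switch. Below is a minimal Python sketch that builds and runs that command on the host. The function names and the VM name are placeholders, and I haven't verified whether the cmdlet behaves the same for a VM whose replication was enabled by ASR, so treat this as a starting point only.

```python
import subprocess
import sys


def build_resume_command(vm_name, resynchronize=False):
    """Build a PowerShell command line that resumes Hyper-V replication for one VM."""
    # Escape single quotes for a PowerShell single-quoted string by doubling them.
    quoted = "'" + vm_name.replace("'", "''") + "'"
    script = f"Resume-VMReplication -VMName {quoted}"
    if resynchronize:
        script += " -Resynchronize"
    return ["powershell.exe", "-NoProfile", "-Command", script]


def resume_replication(vm_name, resynchronize=False):
    """Run the command on a Windows Hyper-V host and return the process exit code."""
    if not sys.platform.startswith("win"):
        raise RuntimeError("Hyper-V cmdlets are only available on a Windows host")
    result = subprocess.run(
        build_resume_command(vm_name, resynchronize),
        capture_output=True,
        text=True,
    )
    if result.stderr:
        print(result.stderr, file=sys.stderr)  # surface any PowerShell error text
    return result.returncode


if __name__ == "__main__" and sys.platform.startswith("win"):
    resume_replication("TestVM")  # "TestVM" is a placeholder VM name
```

Building the argument list separately from running it makes it easy to check exactly what will be sent to PowerShell before touching a production host.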

And lastly, what about that VM I was trying to replicate? I'd made sure to use a scrappable VM in case something happened, which it did. Even after the config tear-down, that VM was still configured to replicate to Azure. There was no way to disable it: the Hyper-V management tool said that replication had been enabled by another program, and that program must be used to change it. So my VM was in an undesirable state (though it apparently still worked for the time being). The solution? Delete the VM.